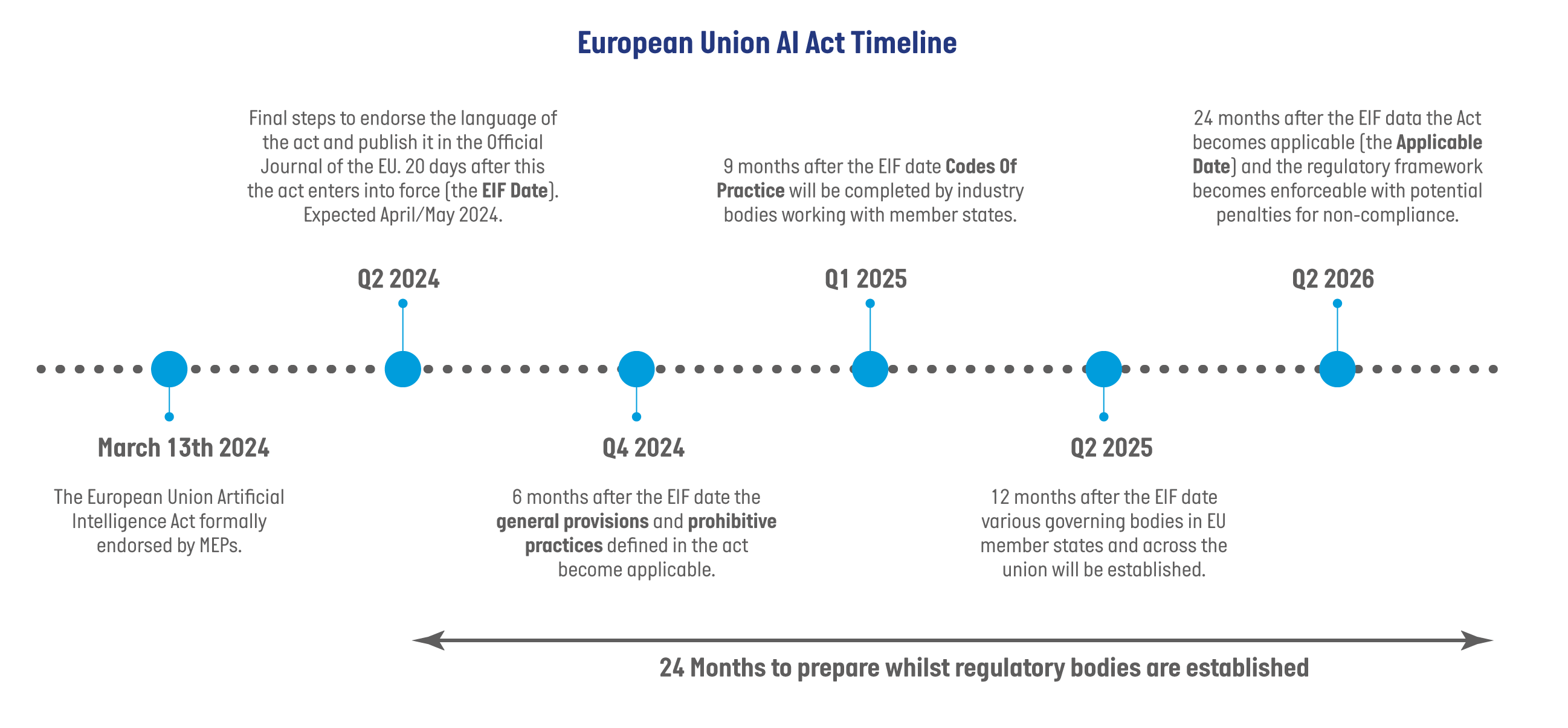

The European Union Artificial Intelligence Act, the world’s first comprehensive piece of AI regulation, was formally endorsed by the Members of the European Parliament by an overwhelming majority (523 votes in favour, 46 against, with 49 abstentions). The next steps involve finalising the language and obtaining endorsement of the final text. Once endorsed, the act will be published in the Official Journal of the European Union. Twenty days later the act enters into force (the EIF date), triggering a two-year countdown to regulatory compliance for organisations producing, distributing, or deploying AI solutions.

There are two important dates that could impact an organisation.

- Q4 2024: Certain AI practices will be prohibited by the act

- Q2 2026: The full act will apply.

Any breach of the regulation could result in severe penalties, in each case whichever amount is higher:

- Prohibited AI systems violations: Up to €35 million or 7% of annual global turnover.

- Other AI act violations: Up to €15 million or 3% of annual global turnover.

- Incorrect information to AI regulators: Up to €7.5 million or 1% of annual global turnover.
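To make these ceilings concrete, the sketch below estimates the maximum exposure for a given turnover. It assumes the higher of the fixed amount and the turnover percentage applies, and it covers only the first two tiers listed above. The function name and tier labels are illustrative and do not come from the act.

```python
from decimal import Decimal

# Penalty ceilings as quoted in this paper: (fixed cap in euros, share of turnover).
# The tier names are illustrative labels, not terms taken from the act.
PENALTY_TIERS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "other_violation": (Decimal("15000000"), Decimal("0.03")),
}


def max_penalty(tier: str, annual_global_turnover: Decimal) -> Decimal:
    """Return the penalty ceiling, assuming the higher of the two limits applies."""
    fixed_cap, turnover_share = PENALTY_TIERS[tier]
    return max(fixed_cap, annual_global_turnover * turnover_share)
```

For an organisation with €1 billion in annual global turnover, the prohibited-practice ceiling under this assumption would be €70 million, because 7% of turnover exceeds the €35 million fixed amount. For a turnover of €100 million, the ceiling for other violations stays at the €15 million fixed amount.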

However, given the level of public exposure to AI and its real and imagined risks, a regulatory breach could cause reputational harm that far exceeds the financial penalty. The legislation has been drafted to encourage consumer trust in AI, so it will be in an organisation’s best interests to adopt compliant AI solutions as soon as possible.

As with previous EU legislation such as GDPR, the act will impact both companies within the European Union and those that wish to trade with member states. It is also expected that similar legislation will be introduced globally following the form set by the European Union.

As organisations begin to adopt AI it is critical that they put in place processes and technologies to address the underlying implications of the emerging regulatory space alongside the solutions they deploy.

The EU AI Act Timeline

The first important date for AI adopters falls six months after the EIF date, when the prohibited practices defined in the act become applicable. This means that some potentially harmful uses of AI that could threaten citizens’ rights will be banned. These include:

- Biometric categorisation systems based on sensitive characteristics and untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases.

- Emotion recognition in the workplace and schools, social scoring, predictive policing (when it is based solely on profiling a person or assessing their characteristics).

- AI that manipulates human behaviour or exploits people’s vulnerabilities.

Law enforcement will be exempt from some of these prohibitions, subject to strict guidelines and judicial approval processes.

The second important date for most organisations will be 24 months after the EIF date, when the Act becomes applicable and organisations must comply with the regulations or risk penalties.

Clarity will emerge over the next 24 months as the regulatory bodies and controls are put in place. However, organisations should not wait for this to happen. If they take steps to govern and monitor their AI solutions now, they will be well placed to comply once the regulation becomes applicable in 2026.
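The milestone arithmetic described above can be sketched as follows, as a planning aid only. The publication date passed in is a placeholder assumption, the function names are hypothetical, and the final text of the act determines the exact application dates.

```python
import calendar
from datetime import date, timedelta


def add_months(start: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def compliance_milestones(publication_date: date) -> dict[str, date]:
    """Derive the milestones described in this paper from a publication date."""
    # The act enters into force twenty days after publication (the EIF date).
    entry_into_force = publication_date + timedelta(days=20)
    return {
        "entry_into_force": entry_into_force,
        # Prohibited practices become applicable six months after the EIF date.
        "prohibitions_apply": add_months(entry_into_force, 6),
        # The act as a whole becomes applicable 24 months after the EIF date.
        "act_applies": add_months(entry_into_force, 24),
    }
```

With a purely hypothetical publication date of 1 June 2024, the EIF date would be 21 June 2024, the prohibitions would apply from 21 December 2024, and the act would apply from 21 June 2026.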

Key Terminology

The act outlines a number of key terms and definitions around AI:

AI System: A machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. In plain English, this is an application that uses methods such as machine learning or statistics to produce predictive or generative outputs.

AI Risk: The combination of the probability of the occurrence of harm from AI and the severity of that harm. This led to four levels of risk being defined, each of which has its own set of regulatory requirements both for providers of the AI system and companies that deploy said system. These risk levels are:

- Unacceptable Risk – AI systems which contravene EU values and the fundamental rights of its citizens. These form the basis of the prohibited list of applications.

- High Risk – AI Systems that are primarily used in critical sectors such as healthcare, managing critical infrastructure, and law enforcement. These systems will be subject to a high level of regulatory oversight and mandated activities by their providers and deployers.

- Limited Risk – AI systems that interact with individuals in use cases or situations not deemed critical. The producers and deployers of these systems will still face regulatory constraints around being able to explain how the AI works and reaches its conclusions and what data has been consumed in training and tuning the AI.

- Low Risk – All other AI solutions.

AI Provider: a natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model, or that re-sells an AI system or a general-purpose AI model developed for them. This will include many of Computacenter’s suppliers, and potentially also us when we re-sell on behalf of our suppliers.

AI Deployer: any natural or legal person, public authority, agency or other body using an AI system under its authority, except where the AI system is used in the course of a personal non-professional activity. This will include any of our customers who choose to adopt AI solutions as part of their day-to-day activities and processes.

AI Data: Data underpins AI solutions in a number of ways including:

- Training Data – data used to train the base AI model that underpins the solution.

- Validation Data – data, independent of the training data, that is used to validate the outputs of the trained model.

- Testing Data – data that is used to provide an independent test of the model both for correct response and for bias and ethical considerations.

- Input Data – the inputs to an AI solution that are used by the model to provide a generated output. For example, the prompts entered into a chatbot or live fiscal data fed into a forecasting model.
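The separation between training, validation and testing data can be illustrated with a minimal sketch that splits one collection of records into three disjoint sets. The split proportions, the fixed seed and the function name are assumptions chosen for illustration. They are not requirements of the act.

```python
import random


def split_dataset(records, validation_share=0.15, test_share=0.15, seed=42):
    """Shuffle records and split them into disjoint training, validation and testing sets."""
    shuffled = list(records)
    # A seeded generator makes the split reproducible, which helps when documenting it.
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_share)
    n_validation = round(len(shuffled) * validation_share)
    test = shuffled[:n_test]
    validation = shuffled[n_test:n_test + n_validation]
    training = shuffled[n_test + n_validation:]
    return training, validation, test
```

Calling `split_dataset(range(100))` returns 70 training records, 15 validation records and 15 testing records, with no record appearing in more than one set. Recording the seed and proportions alongside the model is one way to make the split explainable later.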

What This Means For AI Adopters

AI regulation is coming, and recent surveys indicate that the majority of large enterprises are investigating how AI adoption could help them both run more efficiently and open up new ways of working.

Any organisation producing or deploying high risk AI solutions needs to consider the following regulatory requirements:

- Risk assessment and mitigation

- High quality, well managed data feeding the system

- System documentation and model explainability

- Logging of AI activity and responses

- Clear guidance to the users of the system

- Human oversight to minimise risk

- Robust and secure infrastructure

Any organisation beginning to adopt AI is strongly advised to build the means to govern and regulate its solutions in these areas from the outset.

This will in all likelihood be fulfilled by a mixture of tools, technologies, processes and people, all underpinned by trusted, well managed data.
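As one illustration of the logging requirement listed above, the sketch below builds an audit entry for a single AI interaction and appends it to a log file. The field names, the choice to hash the prompt, and the JSON Lines file format are all assumptions made for illustration. The act does not prescribe a log schema here, and what to retain is a policy decision for each organisation.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(system_id, model_version, prompt, response, reviewer=None):
    """Build one JSON-serialisable audit entry for a single AI interaction."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "model_version": model_version,
        # A hash lets the entry be matched to an input without storing the raw text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response": response,
        # Who reviewed the output, if human oversight was applied (None otherwise).
        "human_reviewer": reviewer,
    }


def append_audit_record(path, record):
    """Append the record as one line of JSON (JSON Lines) to the log file."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
```

An append-only file keeps the example small. In practice the same record could be sent to whatever logging or monitoring platform the organisation already operates.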

Caveats

This paper has been produced through monitoring and research around the emerging legislation. As the process of making the Act law continues, any organisation that believes it is at regulatory risk should seek suitable legal advice to mitigate that risk.